If your robot has high-resolution cameras, you need to compress and stream their images.


In order to achieve this, we use Gstreamer, a free framework for media applications.

This document gives a brief introduction to Gstreamer and explains how the stream from high-resolution cameras is encoded and how a yarp application can read it.

Gstreamer is a free framework for media applications; it provides a set of plugins that the user connects in a pipeline to build applications. It has been ported to a wide range of operating systems, compilers and processors, including Nvidia GPUs.

A Gstreamer application is composed of a chain of elements, the basic building blocks of a Gstreamer application. An element takes an input stream from the previous element in the chain, carries out its function (for example, encoding), and passes the modified stream to the next element. Usually each element is a plugin.

The user can develop an application in two ways: the first is to write an application in C/C++, where the elements are connected through the API; the second is to use the gst-launch command-line tool. The following is an example of how to use the gst-launch command:

gst-launch-1.0 -v videotestsrc ! 'video/x-raw, format=(string)I420, width=(int)640, height=(int)480' ! x264enc ! h264parse ! avdec_h264 ! autovideosink

This command creates a test video source with the properties specified in the string "video/x-raw, format=(string)I420, width=(int)640, height=(int)480"; the stream is then encoded in H.264, decoded and displayed. Every element of this pipeline, except the properties string, is a dynamically loaded plugin. The videotestsrc element lets the user see a stream without using a camera.
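To make the chain of elements easier to see, the pipeline description can be split at each `!` link. This is only an illustrative sketch: the helper name `list_stages` is hypothetical, and the properties string is written here in its compact form without spaces so that it needs no quoting.

```shell
# Pipeline description from the example above (compact caps syntax).
PIPELINE="videotestsrc ! video/x-raw,format=I420,width=640,height=480 ! x264enc ! h264parse ! avdec_h264 ! autovideosink"

# Hypothetical helper: print one pipeline stage per line by splitting on '!'
# and trimming the surrounding spaces.
list_stages() {
    printf '%s\n' "$1" | tr '!' '\n' | sed 's/^ *//; s/ *$//'
}

list_stages "$PIPELINE"
```

Each printed line is one stage of the pipeline, in the order in which the stream flows through it.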

The previous command works on Linux; since Gstreamer is platform independent, the same command can be launched on Windows by changing only the hardware-dependent plugin. On Windows the command becomes:

gst-launch-1.0 -v videotestsrc ! "video/x-raw, format=(string)I420, width=(int)640, height=(int)480" ! openh264enc ! h264parse ! avdec_h264 ! autovideosink

Note that the only element that changes is the encoder (openh264enc), while the decoder stays the same. This is because the decoder belongs to the plugin that wraps the libav library, a cross-platform library for converting streams between a wide range of multimedia formats. [see References chapter]
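The platform-dependent choice of encoder can be captured in a small shell helper. This is a minimal sketch, not part of Gstreamer or yarp: the function names `pick_encoder` and `test_pipeline`, the mapping from `uname -s` output, and the fallback for unknown systems are all assumptions; only the two encoder names come from the commands above.

```shell
# Hypothetical helper: map an OS name (as printed by `uname -s`) to the
# H.264 encoder element used in the examples above.
pick_encoder() {
    case "$1" in
        Linux*)               echo "x264enc" ;;      # Linux example
        MINGW*|MSYS*|CYGWIN*) echo "openh264enc" ;;  # Windows shells (Git Bash, MSYS2, Cygwin)
        *)                    echo "x264enc" ;;      # assumption: fall back to x264enc
    esac
}

# Build the test pipeline description; only the encoder changes per platform.
test_pipeline() {
    enc=$(pick_encoder "$1")
    echo "videotestsrc ! video/x-raw,format=I420,width=640,height=480 ! $enc ! h264parse ! avdec_h264 ! autovideosink"
}

# Print the command for the current machine instead of running it.
echo "gst-launch-1.0 -v $(test_pipeline "$(uname -s)")"
```

Printing the command rather than launching it keeps the sketch safe to run on a machine where the chosen encoder may not be installed.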

Please see the Notes section about the commands in this tutorial.

The server grabs images from the cameras, so it must run on the machine to which the cameras are connected. The server is a Gstreamer command pipeline, while the client can be a yarp or a Gstreamer application connected to the robot’s network.

The server application consists of the following Gstreamer command:

gst-launch-1.0 -v v4l2src device="/dev/video1" ! 'video/x-raw, width=1280, height=480, format=(string)I420' ! omxh264enc ! h264parse ! rtph264pay pt=96 config-interval=5 ! udpsink host=224.0.0.1 auto-multicast=true port=33000

The client can read the stream using a native Gstreamer command:

gst-launch-1.0 -v udpsrc multicast-group=224.0.0.1 auto-multicast=true port=33000 caps="application/x-rtp, media=(string)video, encoding-name=(string)H264, payload=(int)96" ! rtph264depay ! h264parse ! avdec_h264 ! autovideosink

The following variant also crops the decoded image with videocrop before displaying it:

gst-launch-1.0 -v udpsrc port=33000 caps="application/x-rtp, media=(string)video, encoding-name=(string)H264, payload=(int)96" ! rtph264depay ! h264parse ! avdec_h264 ! videocrop left=10 right=30 top=50 bottom=50 ! autovideosink
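The client only receives the stream if it listens on the multicast group and port that the server sends to (224.0.0.1 and 33000 in the server command), so it can help to define both values once. The following is a minimal sketch: the names `GROUP`, `PORT`, `CAPS`, `server_cmd` and `client_cmd` are hypothetical, and the commands are printed rather than executed.

```shell
# Shared settings (values taken from the server command above).
GROUP=224.0.0.1
PORT=33000
CAPS='application/x-rtp, media=(string)video, encoding-name=(string)H264, payload=(int)96'

# Print the server command: grab, encode, packetize and send over UDP multicast.
server_cmd() {
    echo "gst-launch-1.0 -v v4l2src device=/dev/video1 ! 'video/x-raw, width=1280, height=480, format=(string)I420' ! omxh264enc ! h264parse ! rtph264pay pt=96 config-interval=5 ! udpsink host=$GROUP auto-multicast=true port=$PORT"
}

# Print the client command: receive from the same group and port, decode and display.
client_cmd() {
    echo "gst-launch-1.0 -v udpsrc multicast-group=$GROUP auto-multicast=true port=$PORT caps=\"$CAPS\" ! rtph264depay ! h264parse ! avdec_h264 ! autovideosink"
}

server_cmd
client_cmd
```

Changing `PORT` or `GROUP` in one place then updates both commands consistently.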

We are currently using version 1.24.4.

sudo apt-get install libgstreamer1.0-dev \
                     libgstreamer-plugins-base1.0-dev \
                     gstreamer1.0-plugins-base \
                     gstreamer1.0-plugins-good \
                     gstreamer1.0-plugins-bad \
                     gstreamer1.0-libav \
                     gstreamer1.0-tools
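After installing the packages, one way to check that the elements needed by the client pipelines are available is the `--exists` option of gst-inspect-1.0, which is shipped with the Gstreamer tools. This is a sketch under assumptions: the list `REQUIRED` and the helper `missing_elements` are hypothetical names, and the encoders are left out because they depend on the platform.

```shell
# Elements used by the client-side pipelines in this document.
REQUIRED="videotestsrc udpsrc rtph264depay h264parse avdec_h264 autovideosink"

# Print every element that gst-inspect-1.0 cannot find.
# If gst-inspect-1.0 itself is not installed, every element is reported.
missing_elements() {
    for element in $REQUIRED; do
        gst-inspect-1.0 --exists "$element" >/dev/null 2>&1 || echo "$element"
    done
}

missing=$(missing_elements)
if [ -z "$missing" ]; then
    echo "all required elements found"
else
    echo "missing elements:" $missing
fi
```

The installed version can be printed with `gst-inspect-1.0 --version`.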

On Windows, you need to download both the main package and the devel package from here: https://gstreamer.freedesktop.org/data/pkg/windows/

Installation of the Gstreamer package:

Installation of the Gstreamer devel package:

You can verify the installation by running a simple test application composed of a server and a client:

gst-launch-1.0 -v videotestsrc ! "video/x-raw, format=(string)I420, width=(int)640, height=(int)480" ! openh264enc ! h264parse ! rtph264pay pt=96 config-interval=5 ! udpsink host=<YOUR_IP_ADDRESS> port=<A_PORT_NUMBER>

gst-launch-1.0 -v udpsrc port=<A_PORT_NUMBER> caps="application/x-rtp, media=(string)video, encoding-name=(string)H264, payload=(int)96" ! rtph264depay ! h264parse ! avdec_h264 ! autovideosink

If you want to use a yarp application to read the stream, you need to:

yarp name register /gstreamer_src gstreamer <SERVER_IP_ADDRESS> <SERVER_IP_PORT>

on Windows:

set GSTREAMER_ENV=udpsrc port=<A_PORT_NUMBER> caps="application/x-rtp, media=(string)video, encoding-name=(string)H264, payload=(int)96" ! rtph264depay ! h264parse ! avdec_h264

on Linux:

export GSTREAMER_ENV="udpsrc port=15000 caps=\"application/x-rtp, media=(string)video, encoding-name=(string)H264, payload=(int)96\" ! rtph264depay ! h264parse ! avdec_h264"

yarpview --name /view

yarp connect /gstreamer_src /view gstreamer+pipelineEnv.GSTREAMER_ENV
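The Linux commands above can be collected in a small script. This is a sketch under assumptions: the helper name `gstreamer_env_for_port` is hypothetical, port 15000 is just the example value used above, and the yarp commands are printed rather than executed so that the placeholders can be filled in first.

```shell
# Hypothetical helper: client-side pipeline description for a given UDP port.
gstreamer_env_for_port() {
    echo "udpsrc port=$1 caps=\"application/x-rtp, media=(string)video, encoding-name=(string)H264, payload=(int)96\" ! rtph264depay ! h264parse ! avdec_h264"
}

# Export the pipeline under the variable name that the connect command
# refers to (pipelineEnv.GSTREAMER_ENV).
GSTREAMER_ENV=$(gstreamer_env_for_port 15000)
export GSTREAMER_ENV

# Remaining steps, printed for review (placeholders are left unfilled).
echo 'yarp name register /gstreamer_src gstreamer <SERVER_IP_ADDRESS> <SERVER_IP_PORT>'
echo 'yarpview --name /view'
echo 'yarp connect /gstreamer_src /view gstreamer+pipelineEnv.GSTREAMER_ENV'
```

Building the value through a function avoids the nested quote escaping needed when the whole pipeline is typed on a single `export` line.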

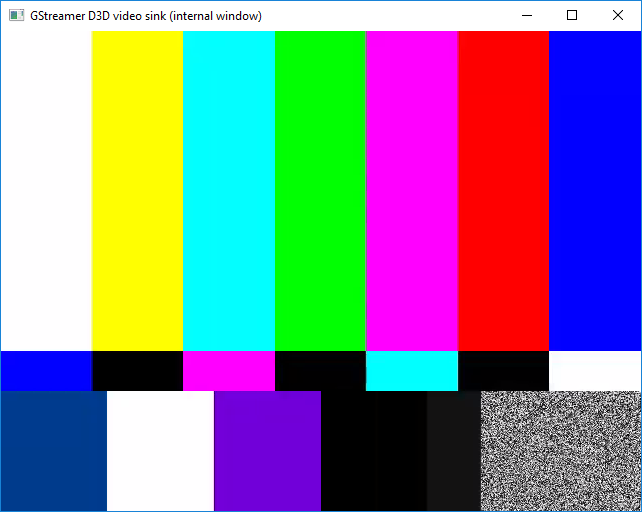

Now on yarp view you can see the following image, where in the bottom right box there is snow pattern.

The Yarp Gstreamer Plugins also allow a yarp image to be fed into a Gstreamer pipeline. See documentation: Gstreamer plugins

[1] Gstreamer documentation https://gstreamer.freedesktop.org/documentation/

[2] Gstreamer plugins https://gstreamer.freedesktop.org/documentation/plugins.html

[3] Libav library documentation https://www.libav.org/index.html

[4] Gstreamer Libav plugin https://gstreamer.freedesktop.org/modules/gst-libav.html

[5] h264 yarp plugin http://www.yarp.it/classyarp_1_1os_1_1H264Carrier.html